This guide presents a systematic approach to identifying and resolving declining recognition accuracy in AI-powered waste sorting systems. It covers the interactions among hardware, software, environmental factors, and material variations that affect machine performance. Using step-by-step diagnostic procedures and practical recovery strategies, operators can restore sorting accuracy and put preventive measures in place to keep it consistent in industrial recycling applications.

Establishing Baseline Monitoring and Early Warning Systems for AI Sorting Performance

Effective management of AI sorting systems begins with comprehensive performance monitoring that can detect even minor accuracy degradations before they become significant operational issues. A well-designed monitoring framework provides valuable data trails for troubleshooting while enabling proactive maintenance interventions. Early warning systems should encompass multiple performance dimensions, including recognition rates, false rejection percentages, and missed contamination incidents, creating a holistic view of system health. Regular performance validation using standardized testing materials helps distinguish between model degradation and normal operational variations, ensuring that maintenance resources are deployed effectively.

Defining Key Performance Indicators and Setting Appropriate Alert Thresholds

Clear key performance indicators (KPIs) form the foundation of effective AI sorter management. Core metrics include overall recognition accuracy, material-specific identification rates, false positive rates, and false negative rates. Each KPI needs a calibrated alert threshold that triggers an investigation when performance moves outside acceptable limits, typically a yellow warning at 5% degradation from baseline and a red alert at 10%. These thresholds should reflect both operational requirements and statistical significance, so that they avoid unnecessary alarms while still surfacing genuine issues promptly. Regular review of the metrics allows the monitoring system to be refined using historical performance data and evolving operational priorities.
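
The two-level alerting described above can be sketched in a few lines. This is a minimal illustration, not a vendor implementation: the names `AlertThresholds` and `alert_level` are hypothetical, and it assumes the 5% and 10% figures mean relative degradation against baseline for a higher-is-better KPI such as recognition accuracy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertThresholds:
    # Assumption: thresholds are relative degradation versus baseline.
    warning: float = 0.05  # yellow
    alert: float = 0.10    # red


def alert_level(baseline: float, current: float,
                thresholds: AlertThresholds = AlertThresholds()) -> str:
    """Classify a higher-is-better KPI (e.g. recognition accuracy)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    # Fraction of the baseline value that has been lost.
    degradation = (baseline - current) / baseline
    if degradation >= thresholds.alert:
        return "red"
    if degradation >= thresholds.warning:
        return "yellow"
    return "green"
```

For lower-is-better KPIs such as false positive rate, the same idea applies with the sign of the degradation reversed.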

Utilizing Sorting Data Dashboards for Real-Time Performance Tracking

Modern AI sorting systems generate extensive operational data that can be visualized through comprehensive dashboards, providing immediate insight into system performance through trend analysis, material classification statistics, and anomaly detection. These interfaces enable operators to quickly identify patterns such as declining accuracy with specific material types or gradual performance drift across all categories. Advanced dashboard systems can correlate performance metrics with operational parameters like throughput rates and material composition, helping identify root causes of accuracy issues. The implementation of real-time monitoring has been shown to reduce problem resolution times by up to 40% compared to manual data collection and analysis methods.

Establishing Standard Procedures for Regular Performance Calibration and Verification

Regular calibration using standardized test materials provides an objective performance baseline by eliminating variables introduced by normal feedstock variations, creating a controlled environment for accuracy assessment. This process involves running predetermined material samples through the sorting system and comparing actual performance against expected results, with discrepancies triggering detailed investigation. Industry best practices recommend weekly calibration checks for critical applications, with comprehensive validation monthly. Documentation of calibration results creates valuable historical data for tracking long-term performance trends and identifying gradual degradation that might otherwise go unnoticed during daily operations.
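
A calibration run of this kind reduces to comparing the labels the sorter produced with the known labels of the test samples. The sketch below assumes a hypothetical `calibration_report` helper and an illustrative 95% per-material acceptance limit. Neither comes from a specific sorting product.

```python
from collections import defaultdict


def calibration_report(expected, predicted, min_accuracy=0.95):
    """Per-material accuracy on a standardized test set.

    expected / predicted: equal-length sequences of material labels.
    min_accuracy is an illustrative limit, not an industry constant.
    """
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have equal length")
    totals = defaultdict(int)
    hits = defaultdict(int)
    for truth, guess in zip(expected, predicted):
        totals[truth] += 1
        hits[truth] += int(truth == guess)
    report = {}
    for material, total in totals.items():
        accuracy = hits[material] / total
        report[material] = {
            "accuracy": accuracy,
            "investigate": accuracy < min_accuracy,  # discrepancy flag
        }
    return report
```

Storing each report alongside its date gives the historical record needed to spot gradual degradation.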

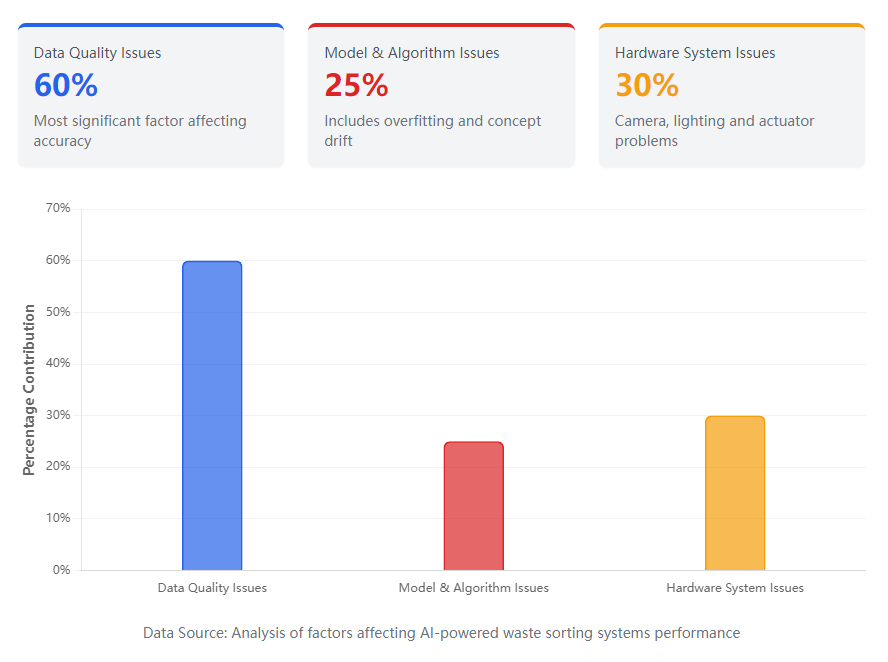

Data Quality Diagnosis: Examining the Data That Feeds the AI Model

AI recognition accuracy fundamentally depends on the quality and relevance of data used for both training and real-time operation, making data quality assessment the logical starting point for accuracy troubleshooting. Common data-related issues include insufficient representation of material variations, image quality degradation, and distribution shifts in incoming materials that differ from original training conditions. Methodical data analysis can identify whether accuracy issues stem from the AI model itself or from changes in the operational environment and material stream characteristics. Comprehensive data diagnosis typically resolves approximately 60% of AI accuracy problems without requiring model retraining or hardware modifications.

Analyzing Training Dataset Representativeness and Diversity

The original training dataset must adequately represent all material variations encountered in actual operations, including different colors, shapes, sizes, orientations, and surface conditions that affect visual appearance. Insufficient diversity in training data creates blind spots where the AI model cannot reliably identify materials that differ significantly from examples in its training set. Analysis should verify coverage across all expected material categories and conditions, with particular attention to low-frequency but high-importance materials that might be underrepresented. Regular training data audits help identify "data drift" where evolving material streams gradually diverge from the original training dataset, creating accuracy gaps.

Identifying Image Quality Issues in Real-Time Data Streams

Real-time image quality directly determines recognition capability, with common issues including motion blur from incorrect shutter settings, overexposure or underexposure from lighting problems, and occlusion from material overlapping. Systematic image analysis should examine sharpness, contrast, noise levels, and consistency across the entire detection area, identifying any factors that might prevent the AI from extracting reliable features. Industrial studies indicate that image quality issues account for approximately 25% of AI recognition problems, often resolved through simple adjustments to camera settings, lighting intensity, or material presentation. Regular image quality monitoring provides early warning of developing issues before they significantly impact sorting accuracy.
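
Two of the checks mentioned above, sharpness and exposure, can be approximated with simple statistics on a grayscale frame. The sketch below uses the variance of a 4-neighbour Laplacian as a blur indicator and counts near-black and near-white pixels as an exposure indicator. It is written in pure Python on nested lists for clarity, so a production system would likely use an image library instead. The function names and the cut-off values 5 and 250 are assumptions.

```python
from statistics import mean, pvariance


def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over interior pixels.

    img: 2D list of grayscale values (0-255). Low values suggest blur.
    """
    h, w = len(img), len(img[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3")
    responses = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
        - 4 * img[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return pvariance(responses)


def exposure_stats(img, low=5, high=250):
    """Mean brightness plus fractions of clipped dark and bright pixels."""
    pixels = [p for row in img for p in row]
    n = len(pixels)
    return {
        "mean": mean(pixels),
        "underexposed": sum(p <= low for p in pixels) / n,
        "overexposed": sum(p >= high for p in pixels) / n,
    }
```

Useful limits for both measures depend on the camera, optics, and material stream, so they are best derived from frames captured when the system was known to perform well.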

Detecting Data Distribution Shifts Caused by Material Variations

Statistical comparison between historical and current material data distributions can reveal significant shifts that degrade AI performance, such as new packaging materials with different reflective properties or changes in contamination profiles. These distribution changes may affect color characteristics, texture patterns, or shape distributions, creating mismatches between the AI model's expectations and actual material appearances. Advanced monitoring systems can automatically flag distribution shifts exceeding predetermined thresholds, triggering investigation and potential model updates. Early detection of distribution changes enables proactive model maintenance rather than reactive troubleshooting after accuracy has significantly declined.
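
One common way to put a number on such a shift is the Population Stability Index (PSI), computed over a single scalar feature such as mean object brightness or hue. The source does not name a specific statistic, so PSI is a technique chosen here for illustration, and the `psi` function and its parameters are hypothetical.

```python
import math


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index of `current` against `baseline`.

    Bin edges are taken from the baseline range. Values outside that
    range are clamped into the first or last bin.
    """
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline has no spread")
    width = (hi - lo) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps avoids log(0) for empty bins
        return [max(c / len(values), eps) for c in counts]

    b = proportions(baseline)
    c = proportions(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))
```

A frequently quoted rule of thumb treats PSI below about 0.1 as stable and above about 0.25 as a significant shift, but any alert limit should be validated against the plant's own history.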

Evaluating Accuracy and Consistency in Data Labeling

In systems employing continuous learning, the quality of correction labels provided by operators directly impacts future model performance, with inconsistent or incorrect labels gradually degrading recognition capability. Label quality assessment should examine both accuracy relative to material ground truth and consistency across different operators and shifts, identifying any systematic labeling errors. Standardized labeling procedures, regular training, and quality control checks help maintain label quality, while automated validation can flag potentially problematic corrections for review. High-quality labeling ensures that continuous learning improves rather than degrades model performance over time.
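
Consistency between two operators who label the same items can be summarized with Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The text does not prescribe a particular metric, so this is one reasonable choice, and `cohen_kappa` is a hypothetical helper.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two operators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    # Chance agreement from each operator's label frequencies.
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both operators used one identical label throughout
    return (observed - expected) / (1 - expected)
```

A kappa near 1 indicates consistent labeling, while values near 0 mean the operators agree no more often than chance, which is a signal to review the labeling procedure.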

AI Model and Algorithm Investigation: Examining the System's Intelligence Core

When data quality issues have been eliminated as potential causes, investigation should focus on the AI model and algorithms themselves, which may suffer from overfitting, concept drift, or configuration problems. Model analysis requires more specialized knowledge but follows methodical procedures that can identify common issues without requiring deep machine learning expertise. Understanding the relationship between model configuration and operational performance enables targeted adjustments that restore accuracy without compromising other aspects of system functionality. Comprehensive model assessment typically resolves an additional 25% of AI accuracy issues that persist after data quality optimization.

Identifying Signs of Model Overfitting or Underfitting

Overfitting appears as excellent performance on training materials but poor generalization to new samples, while underfitting shows up as consistently low accuracy across all material types regardless of training duration. A large gap between training evaluation metrics and operational results indicates overfitting, while persistently low accuracy on both despite adequate training suggests underfitting. Remedies include increasing training data diversity for overfitted models and increasing model capacity for underfitted ones, with the specific approach depending on the root cause diagnosis. Regular validation using separate test datasets helps detect developing overfitting before it significantly impacts operational performance.

Detecting Model Degradation and Concept Drift Phenomena

Concept drift occurs when material characteristics gradually change over time, causing the relationship between visual features and material identity to evolve beyond the original model's understanding. This gradual degradation differs from sudden failures, typically manifesting as slowly declining accuracy across multiple material categories rather than complete failure with specific items. Statistical process control methods applied to accuracy metrics can detect subtle drift patterns, triggering model updates before performance falls below acceptable levels. Modern AI systems can automatically monitor for concept drift and flag when retraining becomes necessary based on predetermined performance thresholds.
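
As one example of statistical process control applied to accuracy, a one-sided CUSUM chart accumulates small shortfalls below a target so that a slow decline triggers an alarm even when no single reading looks alarming. The function name and the `slack` and `limit` values below are illustrative assumptions that would need tuning per installation.

```python
def cusum_drift(accuracies, target, slack=0.005, limit=0.03):
    """Return the index of the first sample at which the downward
    CUSUM statistic exceeds `limit`, or None if it never does.

    slack: shortfall per sample that is tolerated as normal noise.
    """
    s = 0.0
    for i, acc in enumerate(accuracies):
        # Accumulate only shortfalls larger than the slack and never
        # let the statistic go negative.
        s = max(0.0, s + (target - acc - slack))
        if s > limit:
            return i
    return None
```

With a steady series at the target the statistic stays at zero, while a series that loses 0.2 percentage points per sample from a 0.95 target crosses the default limit at index 8.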

Analyzing Algorithm Parameters and Confidence Threshold Settings

Confidence threshold configuration directly impacts the balance between false rejections and missed contaminants. When the threshold governs whether an item is accepted as target material, a setting that is too high rejects too much good material, while one that is too low lets contamination through. Optimal threshold settings depend on specific operational priorities, with premium quality applications typically requiring higher confidence levels than throughput-focused operations. Methodical testing across a range of threshold values helps identify optimal settings for current conditions, with adjustments needed as material streams or operational priorities change. Regular threshold optimization can improve overall system performance by 5-15% without requiring model changes or hardware modifications.
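
Testing across a range of thresholds can be sketched as a sweep over labeled validation samples. The example assumes each sample is a pair of the model's confidence that the item is target material and the true answer, and that an item is accepted when its confidence meets the threshold. The cost weights are a hypothetical way to express operational priorities.

```python
def sweep_thresholds(samples, thresholds,
                     loss_cost=1.0, contamination_cost=1.0):
    """Evaluate candidate confidence thresholds.

    samples: iterable of (confidence, is_target) pairs.
    Returns rows of (threshold, false_rejection_rate,
    missed_contaminant_rate, weighted_cost).
    """
    targets = [c for c, is_target in samples if is_target]
    others = [c for c, is_target in samples if not is_target]
    if not targets or not others:
        raise ValueError("need both target and non-target samples")
    rows = []
    for t in thresholds:
        # Good material rejected because confidence fell below t.
        false_rejection = sum(c < t for c in targets) / len(targets)
        # Contaminants accepted because confidence reached t.
        missed = sum(c >= t for c in others) / len(others)
        cost = loss_cost * false_rejection + contamination_cost * missed
        rows.append((t, false_rejection, missed, cost))
    return rows


def best_threshold(rows):
    """Threshold with the lowest weighted cost."""
    return min(rows, key=lambda row: row[3])[0]
```

Raising `contamination_cost` models a premium-quality operation and pushes the chosen threshold up, while raising `loss_cost` models a yield-focused operation and pushes it down.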

Software Version and Dependency Library Compatibility Verification

Software updates, whether to the sorting application itself or underlying dependencies, can introduce compatibility issues that subtly impact recognition accuracy without causing complete system failure. Maintaining detailed update logs enables correlation between software changes and accuracy deviations, helping identify problematic updates that should be rolled back or modified. Comprehensive testing protocols should validate system performance following any software change, with particular attention to edge cases and previously reliable recognition scenarios. Version control practices that maintain the ability to revert to previous software states provide valuable safety nets when updates introduce unexpected accuracy issues.
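
Correlating an update log with accuracy can start with a simple before-and-after comparison around each update date. The helper below is a hypothetical sketch that assumes one accuracy figure per day, and the three-day window is an arbitrary illustrative choice.

```python
from datetime import date
from statistics import mean


def accuracy_change_around(update_day, daily_accuracy, window=3):
    """Mean accuracy in the `window` days from the update onward minus
    the mean in the `window` days before it.

    daily_accuracy: dict mapping datetime.date to accuracy.
    Returns None when either side has no data.
    """
    before = [acc for day, acc in daily_accuracy.items()
              if 0 < (update_day - day).days <= window]
    after = [acc for day, acc in daily_accuracy.items()
             if 0 <= (day - update_day).days < window]
    if not before or not after:
        return None
    return mean(after) - mean(before)
```

A clearly negative result for one update and not for others makes that update a rollback candidate, although a short window cannot by itself rule out a coincident change in the material stream.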

Hardware System Inspection: Ensuring the Health of AI Perception and Execution Components

AI algorithms depend on accurate sensor data and precise actuator performance, making hardware issues a frequent contributor to recognition accuracy problems that are often misdiagnosed as software issues. Comprehensive hardware assessment should examine cameras, lighting, ejection systems, and computational resources, identifying any components that have degraded beyond acceptable tolerances. Systematic hardware maintenance prevents many accuracy issues while ensuring that identified problems receive appropriate hardware-focused solutions rather than ineffective software adjustments. Industry data suggests that hardware issues account for approximately 30% of accuracy problems in mature sorting systems.

Industrial Camera and Optical Lens Condition Assessment

Camera systems require regular inspection for sensor defects, lens contamination, focus accuracy, and calibration integrity, because even minor issues can significantly degrade image quality and recognition capability. Standard assessment procedures include resolution testing, color accuracy verification, and automatic focus performance evaluation, with documented results enabling trend analysis and predictive maintenance. Preventive maintenance schedules should include regular lens cleaning, calibration checks, and periodic replacement based on manufacturer recommendations and operational history. Well-maintained camera systems typically deliver consistent performance for 15,000-20,000 operational hours before requiring significant refurbishment or replacement.

Lighting System Stability and Uniformity Evaluation

Consistent, uniform illumination forms the foundation of reliable machine vision, with lighting issues representing one of the most common hardware-related causes of recognition accuracy problems. Comprehensive lighting assessment should examine intensity stability, color temperature consistency, spatial uniformity, and temporal stability, identifying any degradation from original specifications. LED lighting systems typically degrade gradually over 10,000-15,000 hours of operation, with intensity reduction of 10-20% potentially impacting recognition accuracy enough to require adjustment or replacement. Regular lighting system maintenance includes cleaning of diffusers and reflectors, verification of power supply stability, and scheduled replacement before degradation impacts operational performance.
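
Spatial uniformity and intensity loss can both be estimated from a grid of brightness readings taken on a uniform reference target. The sketch below is an assumption about how such a check might be structured. `lighting_report`, the min/max uniformity ratio, and the use of a commissioning-time mean as the reference are illustrative choices.

```python
from statistics import mean


def lighting_report(readings, commissioning_mean):
    """Summarize a grid of intensity readings from a reference target.

    readings: 2D list of intensity values across the detection area.
    commissioning_mean: mean intensity recorded when the system was new.
    """
    flat = [value for row in readings for value in row]
    if not flat or max(flat) <= 0 or commissioning_mean <= 0:
        raise ValueError("readings and reference must be positive")
    return {
        # 1.0 means perfectly even illumination.
        "uniformity": min(flat) / max(flat),
        # Fraction of the original intensity that has been lost.
        "intensity_loss": 1 - mean(flat) / commissioning_mean,
    }
```

An `intensity_loss` in the 10-20% range mentioned above would be a prompt to compensate exposure settings or schedule replacement.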

Pneumatic Ejection Valve and Mechanical Actuator Response Testing

Even perfect recognition is ineffective without precise ejection, so regular actuator testing is essential to overall system accuracy. Tests should verify response time, positioning accuracy, and consistency. Pneumatic systems require particular attention to air pressure stability, valve response characteristics, and nozzle condition, with performance deviations indicating maintenance needs before complete failure occurs. Mechanical actuation systems need verification of positioning repeatability, speed consistency, and mechanical wear that might impact ejection precision. Comprehensive actuator testing should occur during preventive maintenance cycles and whenever accuracy issues persist after the recognition system has been verified.

Computational Unit Performance Monitoring and Thermal Management

AI recognition requires substantial computational resources, with performance degradation potentially resulting from thermal throttling, resource contention, or hardware failures in processing components. Continuous monitoring of processor utilization, memory availability, and storage performance helps identify resource limitations before they impact recognition speed or accuracy. Thermal management verification ensures adequate cooling capacity maintains components within specified temperature ranges, preventing performance degradation associated with overheating. Modern AI sorting systems typically incorporate comprehensive system health monitoring that alerts operators to developing computational issues before they impact sorting accuracy.

Environmental and Operational Factor Analysis: Identifying External Influences

Industrial sorting environments present numerous challenges not encountered in laboratory conditions, with vibrations, temperature variations, humidity, and operational practices potentially impacting system accuracy. Environmental analysis examines factors that indirectly affect recognition performance through their influence on material presentation, sensor operation, or system stability. Operational factors including material handling practices and operator interventions can similarly impact accuracy in ways that mimic system degradation. Comprehensive assessment of these external factors resolves many accuracy issues that persist after hardware and software verification.

Impact of Environmental Vibration on Image Acquisition Stability

Mechanical vibrations from nearby equipment or structural transmission can cause image blurring, particularly with high-resolution cameras or longer exposure times required for certain material types. Vibration assessment should examine both frequency and amplitude characteristics, identifying whether mitigation requires equipment repositioning, isolation mounting, or acquisition parameter adjustments. Modern sorting systems often incorporate vibration monitoring that correlates vibration levels with recognition accuracy, helping identify threshold levels that require intervention. Effective vibration control typically improves recognition accuracy by 3-8% in environments with significant mechanical vibration sources.

Effects of Temperature and Humidity on Electronic and Optical Components

Temperature and humidity extremes can affect camera performance, lens characteristics, lighting output, and electronic component operation, creating recognition issues that vary with environmental conditions. Monitoring should track environmental parameters alongside accuracy metrics, identifying correlations that indicate environmental sensitivity requiring mitigation through enclosure modifications or operational adjustments. Optical components particularly require protection from condensation, which can form during rapid temperature changes in high-humidity environments. Proper environmental control typically maintains recognition accuracy within 2% of laboratory performance across expected operational condition ranges.
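
A first check for environmental sensitivity is the correlation between a logged parameter and accuracy over the same intervals. The sketch below computes a plain Pearson coefficient. The function name is hypothetical, and a strong correlation is a reason to investigate, not proof of cause.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with >= 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / math.sqrt(var_x * var_y)
```

For example, hourly enclosure temperatures paired with hourly accuracy that yield a coefficient close to -1 suggest that accuracy falls as temperature rises and that cooling or enclosure changes deserve a closer look.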

Analysis of Feeding Uniformity and Material Layer Thickness Variations

Inconsistent material presentation creates recognition challenges by altering apparent size, shape, and orientation, while overlapping materials prevent complete feature extraction. Feeding system analysis should examine distribution uniformity, layer thickness consistency, and material orientation patterns, identifying any presentation issues that might impact recognition reliability. Optimization of vibratory feeders, conveyor speeds, and distribution systems can significantly improve presentation consistency, subsequently enhancing recognition accuracy. Well-designed feeding systems typically maintain layer thickness variations below 15% and prevent more than 5% material overlap, providing optimal conditions for AI recognition.

Standardization Review of Operator Interactions and Parameter Changes

Inconsistent operator practices or unauthorized parameter modifications can degrade system performance, particularly in systems employing continuous learning where operator corrections directly influence future recognition. Detailed activity logging helps identify practices that might impact accuracy, such as frequent manual overrides, inconsistent correction labeling, or unapproved parameter adjustments. Standardized operating procedures, comprehensive training, and access controls help maintain consistent operations that support rather than undermine AI performance. Systems with well-documented procedures and trained operators typically experience 40% fewer operations-related accuracy issues compared to less structured environments.

Systematic Recovery and Preventive Maintenance Strategies

Effective accuracy restoration requires systematic approaches that address root causes rather than symptoms, followed by preventive measures that maintain performance over the long term. Recovery procedures must be tailored to specific diagnosis results, whether involving data collection, model retraining, hardware maintenance, or operational adjustments. Preventive strategies focus on eliminating common failure modes through scheduled maintenance, continuous monitoring, and knowledge capture that improves future troubleshooting efficiency. Comprehensive recovery and maintenance programs typically reduce accuracy-related downtime by 60-80% compared to reactive approaches.

Developing Targeted Recovery Procedures Based on Root Cause Analysis

Effective recovery begins with precise root cause identification, followed by implementation of specific corrective actions matched to the diagnosed issue, whether data-related, model-related, hardware-related, or operational. Data issues typically require expanded collection and careful labeling, model problems necessitate retraining with appropriate parameters, hardware issues demand cleaning, calibration or replacement, and operational issues require procedure updates and training. Each recovery action should include verification procedures that confirm successful resolution, with continued monitoring to ensure sustained improvement. Documented recovery procedures based on previous successful interventions accelerate future problem resolution while ensuring consistent approaches across different operators and shifts.

Establishing Closed-Loop Feedback Mechanisms for Continuous Model Learning

Continuous learning systems require carefully structured feedback mechanisms that improve rather than degrade model performance through accurate, consistent correction data from operational experience. Effective feedback loops incorporate quality control measures that validate correction labels before incorporation into training data, preventing erroneous learning from occasional operator mistakes. The feedback system should balance stability against adaptability, incorporating new information without forgetting previously learned material characteristics. Well-implemented continuous learning typically improves model accuracy by 0.5-1% monthly, gradually enhancing performance without requiring disruptive retraining campaigns.
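
One possible quality gate for correction labels is to require agreement between several operators before a correction enters the training data. The sketch below is an assumption about how such a gate could work and does not describe any particular product. The tuple layout, `min_votes`, and `min_agreement` are all hypothetical.

```python
from collections import Counter, defaultdict


def validated_corrections(corrections, min_votes=2, min_agreement=0.75):
    """Keep only corrections that enough operators agree on.

    corrections: iterable of (item_id, operator_id, label).
    Returns a dict mapping accepted item_id to its agreed label.
    """
    by_item = defaultdict(dict)
    for item_id, operator_id, label in corrections:
        # One vote per operator per item. A later entry replaces an
        # earlier one from the same operator.
        by_item[item_id][operator_id] = label
    accepted = {}
    for item_id, votes in by_item.items():
        label, count = Counter(votes.values()).most_common(1)[0]
        if len(votes) >= min_votes and count / len(votes) >= min_agreement:
            accepted[item_id] = label
    return accepted
```

Items that fail the gate are not discarded silently in a real workflow. They are natural candidates for supervisor review, which also exposes systematic disagreements between operators.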

Planning Preventive Maintenance Schedules and Inspection Checklists

Comprehensive preventive maintenance programs address common accuracy degradation sources before they impact operational performance, with scheduled activities ranging from daily cleaning to annual comprehensive inspections. Maintenance schedules should reflect component-specific reliability characteristics and criticality to system function, with higher-frequency attention to components like optical surfaces and ejection systems. Detailed checklists ensure consistent maintenance execution across different technicians and shifts, while documentation supports trend analysis and schedule optimization. Effective preventive maintenance typically reduces unexpected downtime by 70% and accuracy-related issues by 60% compared to reactive approaches.

Building Knowledge Bases and Creating Typical Fault Resolution Manuals

Systematic documentation of troubleshooting experiences, including symptoms, diagnostic procedures, root causes, and effective solutions, creates organizational knowledge that improves future problem-resolution efficiency. Knowledge bases should incorporate search capabilities that help technicians quickly find relevant historical cases, while standardized documentation formats ensure consistent information capture. Resolution manuals for common issues provide immediate guidance for frequent problems, reducing resolution time and ensuring consistent approaches. Organizations with comprehensive knowledge management typically resolve accuracy issues 50% faster than those relying on individual experience and memory.

The implementation of these strategies for AI sorting systems represents a proactive approach to maintaining the sophisticated technology that underpins modern recycling operations, ensuring consistent performance through methodical maintenance and continuous improvement practices.